Proceedings of THE THIRD ANNUAL RALUT SENIOR SCHOLARS SYMPOSIUM

Massey College

University of Toronto

April 10, 2008

Edited by Cornelia Baines

Toronto: Retired Academics and Librarians of the University of Toronto (RALUT), 2008

Contents

Introduction

The Presenters

The US Hegemon and its North American Periphery

Policies That Value Water: An Overview

G8 Accountability: The Civil Society Effect

Interest and its Link to Self-Regulation

Screening for Cancer: Are We Chasing an Elusive Dream?

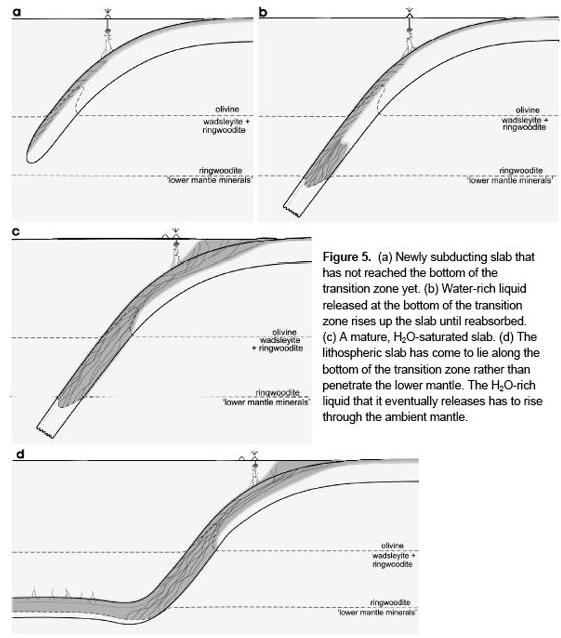

Planet Earth's Deeper Water Cycles

On The Years of the Highest High and the Lowest Low Daily Temperatures

Antarctica and Human Biology

Introduction

For the second time, RALUT is publishing papers presented at its annual Senior Scholars’ Symposium. These proceedings include eight papers presented at Massey College on April 10, 2008.

The range of topics and the academic excellence that the papers reveal are impressive, illustrating well the ongoing contributions of retirees to the academic life of the university in particular and intellectual life in general.

The symposium was initiated in 2006 by RALUT's Senior Scholars' Committee chaired by Germaine Warkentin. That it has been so successful is a tribute to the organizing subcommittee which in 2008 included John Dirks, Merrijoy Kelner and John McClelland.

But RALUT must also express gratitude to those who agreed to speak! Doing so meant that they were obliged to submit manuscripts so that we could publish them in the proceedings. As a result they had to put up with editorial demands for clarification and references. They did this with very good grace and for that they deserve many thanks.

Furthermore, we were honoured that the Honourable Adrienne Clarkson agreed to be our featured guest and that we could enjoy her reminiscences of university life.

Finally, RALUT must acknowledge our good fortune in being able to hold these symposia in the Upper Library of Massey College, where not only does intellectual exchange thrive, but a wonderful ambience can also be enjoyed. As current chair of the Senior Scholars' Committee and so-called editor of the proceedings, I am obliged to say that without the expertise of Ken Rea, we would have no proceedings. Now we can only hope that our readers will enjoy the fruits of our labours.

Cornelia Baines

The Presenters

Stephen Clarkson: Department of Political Science.

Lino Grima: Centre for Environment & Geography.

Peter I. Hajnal: Faculty of Information Science.

Suzanne Hidi: Department of Laboratory Medicine and Pathobiology.

Anthony B. Miller: Department of Public Health Sciences, Faculty of Medicine.

Pierre-Yves Robin: Department of Geology.

John W. Senders: Faculty of Applied Science and Engineering.

Becky A. Sigmon: Department of Anthropology.

The US Hegemon and its North American Periphery

Stephen Clarkson

Introduction

At a time of military failure abroad and economic collapse at home, questions about US power are being asked with new urgency. While commentators in the media have little trouble pointing out that the emperor has no clothes, the discipline of international relations (IR) is largely stuck in a one-sided view of the United States which focuses on its six decades of global dominance.

The voluminous IR literature on the United States has two prime characteristics. US power is measured quantitatively to compare it with that of its actual or potential rivals. It is also discussed instrumentally in terms of whether it is exercised unilaterally by American decision-makers or multilaterally within international organizations. In either case, US power has been largely taken as a given, the independent variable whose massive material assets explain its capacity to shape events around the world. Understood as the master of its own capabilities, Washington's problem is presented as how to apply them: either exercising its will by imposing its hard power or working collaboratively using its soft power.

I am proposing to invert IR's default mode by establishing a matrix to determine how the United States' international partners contribute to (or constrain) its international efficacy. Whereas IR literature has traditionally focused on the United States as the international order's subject whose massive material assets explain its consequent capacity to affect the world outside its borders, my approach considers the United States as object: that is, how its present power is a function of the substantial control it has acquired over other states' resources in the past and of its capacity to mobilize their moral and material support in the present.

As I am more a scholar of North America's political economy than an analyst of international relations as a whole, my inquiry's principal interest is to answer a new question about the continent: How does the United States' immediate periphery, Canada and Mexico, contribute to or constrain American power? This query can be restated by posing the counterfactual question, “In what ways and to what degree would US power be reduced (or enhanced) if Canada and Mexico did not exist on its northern and southern borders?” To answer this question I propose to address three related propositions that explore the three major vectors of US power – material assets, relations, and global structures. My ten cases will provide probes into the most salient issues relevant to each proposition so that an interested public in each of the continent's three countries will have a transformative understanding of North America's geopolitical dynamics.

US Material Power: Proposition A. In terms of their economic, human, and resource contributions, Canada and Mexico are the most important external sources of US material power. This first part of the study will evaluate four dimensions of the periphery's contributions to US economic strength.

1. Aggregate trade and investment data will be marshalled to identify how much the two peripheral economies contribute to US GDP, e.g. by expanding its market, creating jobs, and so raising its size and productivity.

2. Sectoral production networks. Case studies of a key industry (steel), a vulnerable manufacturing sector (automobiles and car parts), and a central services sector (banking) will illustrate how the periphery can both bolster and limit US corporations' scope and success.

3. Labour flows. Migration data will document how well-trained Canadian brains and low-cost Mexican brawn provide a flexible labour supply to meet US needs.

4. Energy and resource supplies. The contribution of oil, gas, and electricity flows from the periphery to US resource security and stability will be documented.

US Relational Power: Proposition B. Geography makes Canada and Mexico the chief providers of US border security, but history caused their support for US military power to diverge. Since US hard power is a function of American defence capacity, this part of the study will consider the periphery's participation in (or abstention from) three facets of US relational power.

5. Border security. The periphery's role in providing American security will emerge from assessing a range of smart-border initiatives introduced since 2001.

6. North American defence. Canada supported and Mexico resisted contributing to US continental defence through cooperation with the Pentagon's Northern Command.

7. The US war on drugs. Canada's lesser and Mexico's greater roles as producers and conduits of addictive drugs for the enormous American market have contradictory effects. While drug cartels arguably undermine US power, the two governments have cooperated with Washington to restrict these suppliers, thereby buttressing US power.

US Structural Power: Proposition C. Canada's and Mexico's multilateral contributions to building the norms of the world order have had both intended and unintended effects on US structural power. This final part of the study will investigate three ways that the periphery impacted the United States' structural power through constructing global governance regimes which in some cases project and in others restrict its influence overseas.

8. Global multilateral regimes. With the International Criminal Court, the Responsibility to Protect doctrine, and UNESCO's convention on cultural diversity, Canada led an effort to forge governance norms that might constrain the United States while Mexico was reluctant. Within the effort to construct a global climate change regime, Canada supported the Kyoto accord at first but then buttressed Washington's efforts to undermine this embryonic international regime.

9. International diplomacy. Mexico took the lead in resisting US intervention in Castro's Cuba and opposing the Reagan administration's militarization of civil conflicts in Central America, thereby buttressing an international structure centred on the norms of sovereignty and non-intervention. In the same vein, Canada supported Mexico's Security Council efforts in early 2003 to resist US attempts to have the United Nations endorse a military attack on Iraq.

10. International economic order. In contrast, Canada supported and Mexico resisted the post-World-War-II construction of multilateral economic institutions that framed US global hegemony. By negotiating first continental free trade and then their own bilateral trade and investment treaties, Canada and Mexico propagated economic norms that deepened US structural power.

Relation to my previous work

When I left Sovietology behind, my primary interest became the political economy of Canada, in particular the various dimensions of the country's integration in a North America then made up just of the United States and Canada. When, in the mid-1990s, NAFTA created a new world region, my teaching and research extended to embrace this continental governance in the context of the global structure created by the WTO at the same time. My present project will complete a trilogy of large studies that I have conceived on relations within this new North America. Uncle Sam and Us: Globalization, Neoconservatism, and the Canadian State took Canada as the dependent variable by examining how exogenous forces (globalization) and endogenous change (neoconservatism) affected the Canadian state's policy capacity. In October 2008 I published a broad study (made possible by my last SSHRC grant) with the University of Toronto and the Woodrow Wilson Presses – Does North America Exist? Governing the Continent after NAFTA and 9/11 – which takes North America as the dependent variable by looking at how US, Canadian, and Mexican actors generate transborder governance. My new project will be the culmination of more than a decade's work on North America, taking the USA as the dependent variable.

In reframing North America's space in terms of its political-economy realities, it is imperative to understand Mexico's part in the new North America, in which it plays an important, if not always optimal, role. For linguistic as well as logistical reasons, much scholarship on North America that is knowledgeable about Canada fails to appreciate Mexico's unique effects. This is why I have spent a month or two each winter for the last three years in some of Mexico City's best research centres to make my work sensitive to that country's reality, and why I will need to return there as my work proceeds.

With US power now being challenged economically, militarily, and normatively, blithe assumptions about American supremacy are no longer analytically useful. These empirical studies, which will show how US power is constructed and constrained by its neighbours, should prove relevant to both the academic and policy-making communities.

Academia. This study's expected contribution will be to develop the notion that US power is not a finite given but is, in part, generated from outside the United States' territorial confines. Once successfully applied to Canada and Mexico, this methodology could be extended and adapted to other countries in order to establish a more complete inventory of the external sources of US power. Closer to home, the very substantial literatures on the Canada-US and the Mexico-US relationships have each tended to treat its particular dyad as a “special” – putatively unique – international relationship. Only recently have efforts been made to compare Canada's US relationship with Mexico's. The comparative study of Canada and Mexico is a new research niche which opened up with the signing of NAFTA. My project will reframe this new literature comparing the two US neighbours by looking at them as agents instead of objects in intergovernmental relations on the continent.

Policy-makers. I hope that this study, which coincides with great intergovernmental instability, will contribute to policy makers' rethinking old continental realities in Washington, Ottawa, and Mexico City. Insight into the components of US power is clearly necessary at this historical juncture when the United States is no longer sitting, unchallengeable, at the pinnacle of power in the international system but is increasingly dependent – as Barack Obama has frequently pointed out – on its partners' support, both material and moral. For instance, by specifying how the continental periphery contributes to US power, my findings on North America's intergovernmental supports should help Canadian and Mexican leaders decide how to distribute their power resources between the continental and the global levels as their two countries balance centrifugal corporate pressure for continental regulatory integration against the centripetal political needs for autonomy and decentralization.

While it is commonly believed that Canada and Mexico matter very little to Washington as it faces far more urgent global challenges, there are significant links between the continental and the global which contradict that assumption. With Indian and Brazilian companies buying large chunks of the Canadian steel and mineral industries and with China wanting entry into the Mexican petroleum economy, US policy makers must interrogate their assumptions about the United States' assured access to the continent's resources.

Summary

Since the United States' invasion of Iraq, its pre-eminence in the international system is being re-examined in terms of its hard military capabilities. The collapse of its financial institutions has re-animated discussions about the extent of the United States' soft global power. A striking aspect of this debate is how little attention is paid to the way in which US power is constituted. Apart from reports that assess the US economy's dependence on foreign providers of strategic raw materials – most importantly petroleum – the literature on US hegemony has been built on the implicit assumption that Washington is the sole creator of its own capabilities. Academic and policy studies generally examine the extent of US power and how it is best applied abroad, without considering the extent to which it derives from external sources.

Over the past eight years I have been working on North America's political economy by asking to what extent it has become a world region in any way similar to the substantial entity known as the European Union that has been created over the past 50 years. In my new work I am inverting the way that US power is generally discussed by considering to what degree Canada and Mexico construct (or constrain) the United States, either because of their geographical contiguity or their unique socio-economic characteristics. Integrating both international relations and political economy approaches, I am using ten case studies to assess the complex ways in which the United States' strength derives from its continental periphery. These probes, which range from energy supplies to international legal norms, will explore three related propositions.

1. Through their economic, human, and resource contributions, Canada and Mexico are the most important external sources of US market power.

2. Canada and Mexico provide crucial border security to the United States, though they have played a more mixed role in supporting US military power.

3. Uncle Sam's two neighbours have helped build the norms and international structures that support US economic power but potentially circumscribe US global power militarily through the International Criminal Court and culturally at UNESCO.

Beyond the academic goals of reassessing the sources of American power and advancing comparative political-economy scholarship in Canada and Mexico, this research promises to have some intriguing implications for policymakers.

In sum, I am hoping to contribute both to academics' conceptualizing and policy makers' understanding of the new realities of post-NAFTA, post-9/11 North America and of the special role played by Canada and Mexico in constituting American power in the present conjuncture when the global hegemon's leadership is under strain.

Policies That Value Water: An Overview

A. P. Lino Grima

University of Toronto

Centre for Environment & Geography

Introduction

Canadians need to re-think their perception of plentiful water and, more importantly, we need to reconsider our profligate use of water, especially in the context of global warming. The average per capita municipal water use in Canada is 622 litres per day, more than double the average in European cities (1,2). To some extent Canadians' misperception of water abundance is understandable: Canada has about 7% of the earth's land surface, about 7% of the earth's renewable freshwater but only 0.5% of the world's population (3,4). However, most of our rivers flow north, away from the thin ribbon of settlement at the 49th parallel, and there are spatial and temporal variabilities that leave some parts of Canada, such as the Prairie Provinces, vulnerable to serious and recurring droughts. These recurring shortages will be exacerbated by global warming and the vast amount of water required to produce more energy from the Tar Sands projects in Alberta (5).

The highly significant economic benefits that water provides for our industry, cities, hydro-power generation, irrigation, forestry products and tourism are amenable to quantification, but the techniques are controversial because water is only one input to economic production and its availability varies widely over time and space (6-8). Other benefits related to ecological services (e.g. maintaining wetlands) are harder to quantify (9).

Canadians' profligate water use may be due to a perception of abundance rather than an indication that Canadians do not value our water inheritance. However, if our water use pattern is:

one must then conclude that Canadians collectively either do not value our water inheritance or have failed to develop appropriate water policies.

This paper briefly outlines some policy options that demonstrate that a community values its water, even as it confronts the twin imperatives of ensuring sustainability and exploiting the comparative economic advantage that plentiful water confers. These policies are likely to become more salient as population size increases, living standards rise, water-demanding energy projects proliferate, climate warming reduces runoff, and conflicting demands (e.g. for irrigation vs. industry) increase.

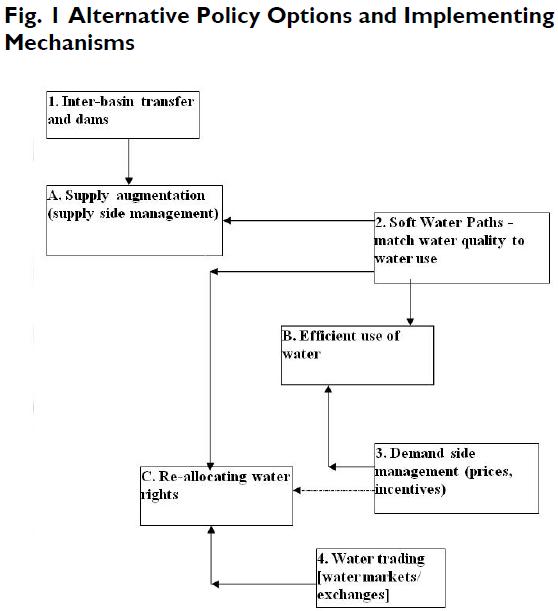

All communities need to develop effective policies to cope with water shortages, increasing demand and competition such as for farming vs. tar sands projects in Alberta. Failing that, policy options such as restrictions on irrigation or urban water services become inevitable. Given initial (current) water uses, rights and institutions, Figure 1 lists three broad coping options: (A) supply augmentation; (B) more efficient use of the available water; and (C) re-allocation of water-use rights. Figure 1 links each option to one or more of four policy mechanisms.

Supply Augmentation

Supply augmentation or supply-side management (option A in Fig. 1) has been the conventional way to cope with real or perceived shortages, not only in the case of water but also in other natural resource and infrastructure issues such as electricity, petroleum, roads, and solid waste management. In spite of increasing financial cost and negative environmental and social impacts, supply augmentation is very appealing. It facilitates "business as usual" by giving more of the same to business and individual consumers without asking for changes in wasteful habits reinforced by past growth, profits and convenience. And as long as it works, it is likely the best route to take, with the proviso that the users of the water or electricity or roads or gasoline face the full cost of producing the service that they use. Most of the time there are environmental and social costs of such services, such as air and water pollution; displacement of population when dams are built; noise; and global impacts such as the destruction of the ozone layer by chlorofluorocarbons and the increasing concentrations of carbon dioxide in our atmosphere. Economists refer to these costs as externalities, i.e. negative impacts that are not paid for by the beneficiaries of the activities that cause these impacts.

In the case of water, the most conventional way to increase supply is by increasing storage of run-off in dams to produce hydro-electricity, provide urban water for residents, commerce, industry and farming, enhance recreation opportunities, and reduce flood damage. Other modes of supply management are inter-basin transfers via pipelines (e.g. the London, Ontario, pipeline from Lake Huron), drinking water treatment plants and storage towers in cities. In addition to the need to address the externalities of such projects, developing new water sources is likely to become increasingly expensive because the more accessible and least expensive sources have very likely been exploited already. “Business as usual” is not a habit likely to be given up easily in our societal context, where engineering works are generally considered manifestations of progress and civilization. However, the increasing economic, social and environmental costs of supply management will make other options for coping with water shortages more appealing.

Increased Efficiency

One can increase the efficiency of conventional water resources (option B in Fig. 1) in two ways: the use of demand-side management incentives (policy mechanism 3 in Fig. 1) and the use of the newly emerging water soft path (policy mechanism 2), which is defined below.

The literature on water demand management goes back to the late 1960s and early 1970s, largely as a response to wasteful municipal and irrigation water uses (10,11). For example, municipal water users typically face low water rates. In a well-functioning economic system, one would not object to affordable, low-cost water, provided the full costs of supply are covered by users. For the past five decades the consensus has been critical of water rates that do not correctly reflect the full cost of water, because they give incorrect signals to consumers and encourage wasteful uses. (For useful reviews see 12, 13.) For example, many cities in Canada and the US do not meter individual homes (3), and none meter individual units in high-rises. In this case consumers are given the signal that it does not matter how high or low their water use is – certainly not a signal to use water wisely and avoid waste!

Nearly all municipalities in Canada have declining block water rates, i.e. the first block of water use has a higher unit cost than the next, so the unit cost of water decreases as residents use more water in their homes and gardens. Although this is better than an unmetered water service, it does not provide a compelling incentive for the consumer to reduce water use. Municipal water rates should accurately reflect the increasing cost of supplying water to the user. This could be accomplished by adopting increasing block rates, i.e. raising the unit cost as the volume used increases. This approach also makes it possible for low-income consumers, who are not likely to demand a lot of water, to enjoy a basic water service at the lowest rate.
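To make the contrast between the two rate structures concrete, here is a minimal Python sketch. The block sizes (in cubic metres) and prices are hypothetical and are not drawn from any Canadian municipality; the only point is that the average unit cost a household pays falls with use under declining blocks and rises under increasing blocks.

```python
"""Compare a declining-block and an increasing-block water tariff.

All block sizes and prices are hypothetical, chosen only to
illustrate the two rate structures described in the text.
"""

from typing import List, Tuple

# Each tariff is a list of (block_upper_limit_m3, price_per_m3).
# The last block uses float("inf") so it covers all remaining use.
Tariff = List[Tuple[float, float]]

DECLINING: Tariff = [(20.0, 2.00), (50.0, 1.50), (float("inf"), 1.00)]
INCREASING: Tariff = [(20.0, 1.00), (50.0, 1.50), (float("inf"), 2.00)]


def bill(volume_m3: float, tariff: Tariff) -> float:
    """Return the total charge for a given volume under a block tariff."""
    total = 0.0
    lower = 0.0
    for upper, price in tariff:
        if volume_m3 <= lower:
            break
        # Only the portion of use that falls inside this block is billed here.
        in_block = min(volume_m3, upper) - lower
        total += in_block * price
        lower = upper
    return total


def average_price(volume_m3: float, tariff: Tariff) -> float:
    """Average unit cost the household actually pays."""
    return bill(volume_m3, tariff) / volume_m3


if __name__ == "__main__":
    for v in (15.0, 40.0, 100.0):
        print(
            f"{v:>5.0f} m3: declining {average_price(v, DECLINING):.2f}/m3, "
            f"increasing {average_price(v, INCREASING):.2f}/m3"
        )
```

With these made-up numbers, a household using 100 m3 pays an average of 1.35 per m3 under the declining schedule but 1.65 per m3 under the increasing one, while a household using only 15 m3 pays 2.00 versus 1.00. The low-volume user is therefore the one who benefits from the increasing-block design.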

In addition to more appropriate water rates, public education, full cost pricing, labelling large water-using appliances such as washing machines, and changing building codes to require low-flow toilets and showers are other policy options to increase water-use efficiency. The draft changes in the Ontario Permits to Take Water (pursuant to the Ontario Clean Water Act and the Safeguarding and Sustaining Ontario's Water Act) go even further by requiring municipal water audits.

A second way to increase the efficiency of conventional water resources is to adopt water soft policies to meet increasing water demands (policy mechanism 2 linked to options A and B in Fig.1). The water soft path is analogous to the soft energy path (14). With this approach we match water quality to its proposed use. For example, water for cooling in industry, flushing toilets or irrigating golf courses does not need to meet potable standards. Water soft policies focus not so much on the resources (in this case water) but on the service that the water is meant to satisfy. Matching the service with a quality of water broadens the range of policy options from the conventional engineering approach of building more dams and pipelines and digging deeper wells to making better use of the available water. Thus water soft options can increase the amount of water available for use. If gray water is used to water gardens and golf courses instead of being piped to the sewage treatment plant, the amount of water available to the municipality is increased without the need to build yet another dam or dig another well.

Conventional water planning recognizes that water soft path options increase the efficiency of conventional water supplies. However, there is another conceptual component of water soft analysis that sets it apart from conventional 'efficiency' approaches. As Brooks (15) notes, the water soft path approach turns “typical planning practices around… Instead of starting from today and projecting forward, start from some water-efficient future and work backwards (“backcast”) to find a feasible and desirable way between that future and the present.” The future in this approach is “normative in the sense that environmental sustainability plus social and economic equity” are not just desirable but required objectives. Sustainability and equity become central to the analysis. This hitherto under-tilled field holds great promise for both research and application.

Re-allocating water rights (Option C)

Standards, regulations and guidelines restrict the use or disposal of water; failure to comply carries the threat of prosecution. Prices, charges, taxes, subsidies and similar economic incentives (positive or negative) impose specific costs on the use or disposal of water but, unlike regulations, offer more flexibility to users of water. For example, one could pay more to use more water or even pay to have others clean up one's pollution. Under regulation and economic incentives, rights to use water may be limited, crimped, and made more costly. A different approach is to re-allocate the right to use water among users.

There are two legal principles for allocating water rights in North America. In the eastern and wetter half of North America, the customary English “riparian right” applies. Under this legal regime, the owner of land fronting a river has to allow the flow to pass to the downstream neighbour undiminished in quantity and quality. The “riparian right” of the neighbours thus imposes a riparian obligation on each water user. Government may issue permits-to-take-water, which are not transferable and may be changed or revoked at the discretion of the appropriate agency.

In the western part of the U.S.A. and Canada, where water is clearly limited, the doctrine of “prior appropriation” prevails. Under this legal regime, the government allocates water rights on a “first-in-time, first-in-right” basis. The water rights are most often linked to land ownership. This legal regime for water rights was crucial in attracting settlers to the frontier. A common feature of prior appropriation is that if the water is not used, the allocation is lost, a kind of “use it or lose it” approach – hardly an incentive to reduce one's water use! To provide an incentive to use water more efficiently, some jurisdictions (e.g. in the Murray-Darling basin in Australia) allow owners of water rights to trade their rights to other users through a water rights exchange, analogous to the financial stock exchange.

Under a regime of prior appropriation, banning trading does not provide an incentive to increase water-use efficiency because there is no market for any “saved” water. If trading water rights is allowed, water is used more efficiently by the entry of junior rights owners and the general adoption of more efficient technologies that create the “saved” water in the first place. However water use does not necessarily decrease when water trading is allowed. Whether the total water use increases or not depends on the added demand of the new water rights holders. For example, in southern Alberta, water use may increase if oil companies buy quotas from water-rights holders who were not fully using their quota (5).
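The point that trading need not reduce total use can be illustrated with a small Python sketch. It rests on simplifying assumptions of our own: a single seller, a single new entrant with no prior rights, and hypothetical volumes unrelated to any real water-rights data. The `Licence` class and `trade` function are illustrative names, not part of any existing tool. The sketch contrasts water "saved" through more efficient technology with quota that was simply going unused.

```python
"""Why allowing water-rights trades need not reduce total use.

Hypothetical sketch: one seller, one new entrant with no prior rights,
and made-up volumes. Not based on any real water-rights data.
"""

from dataclasses import dataclass
from typing import Tuple


@dataclass
class Licence:
    quota_m3: float  # volume the water right allows per year
    used_m3: float   # volume actually withdrawn per year


def trade(seller: Licence, saving_m3: float, buyer_demand_m3: float) -> Tuple[float, float]:
    """Return (total use before, total use after) a single trade.

    The seller first cuts its own withdrawals by `saving_m3` (e.g. through
    more efficient irrigation), then sells all quota it no longer uses.
    The buyer withdraws as much of the purchased volume as it needs.
    """
    if not 0 <= saving_m3 <= seller.used_m3:
        raise ValueError("saving must be between 0 and current use")
    before = seller.used_m3                    # the new entrant used nothing before
    seller_after = seller.used_m3 - saving_m3
    tradable = seller.quota_m3 - seller_after  # "saved" water plus never-used quota
    buyer_use = min(tradable, buyer_demand_m3)
    return before, seller_after + buyer_use


# Quota fully used; efficiency frees 30 m3 that the buyer then uses in full.
print(trade(Licence(quota_m3=100, used_m3=100), saving_m3=30, buyer_demand_m3=30))  # → (100, 100)
# Quota partly unused; a new user takes up the slack.
print(trade(Licence(quota_m3=100, used_m3=60), saving_m3=0, buyer_demand_m3=40))  # → (60, 100)
```

In the first case total use is unchanged, although the water now supports an additional activity. In the second case total use rises from 60 to 100. This parallels the southern Alberta concern that buyers may take up quota that existing holders were not fully using.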

The costs associated with trading water rights include the administrative costs of legally defining water rights and of monitoring and enforcing trades, as well as the infrastructure costs required by an expanded network of water users. Gains include the value of the new economic activities of the new users of the traded water. Gains are more likely to exceed costs when water is scarce and therefore more valuable (16). Examples of formal trading on water rights exchanges are few, but there are functioning markets in the Central Valley of California, Chile, South Africa and the Murray River Basin of New South Wales (17, 18).

Challenges in Implementation

As noted in the Introduction, computing the value of water is very challenging, partly because it has so many different uses and its quality and quantity vary over space and time and partly because there are so many personal preferences (e.g. sustaining wildlife vs. golf) to take into account. A more pragmatic approach is to focus on three overlapping policy questions:

While the value of water to society is crucial and evident, optimal policy mechanisms are often not simple, transparent, equitable or efficient, because water is the most common commodity almost everywhere and it is easily taken for granted. As a result, even basic information on water supply and use is often not available. For example, 1996 is the last year for which water use data across Canada have been published. Strong support for water supply and demand data and research is basic to a much-needed federal interest in developing a Canadian water strategy (19).

Another challenge is that a one-size-fits-all approach does not work well. The best approach is to base each decision on detailed research on the individual case. For example, some municipalities have tried to reform their water rates with insufficient information about consumers' price response and were faced with declining demand to the point that their total revenues fell short of expenditures. Part of the cost of implementing reform is the cost of developing detailed information on a case-study basis.
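The revenue problem can be illustrated with a short Python sketch. It assumes a constant-elasticity demand curve, which is our modelling assumption and not part of this paper. All prices, volumes, required revenues and elasticities are hypothetical. A utility that raises its price expecting a weak consumer response (elasticity -0.2) projects a surplus. If consumers in fact respond more strongly (elasticity -0.8), the same price produces a shortfall.

```python
"""Sketch: how misjudging price response can leave a utility short of revenue.

Assumes a constant-elasticity demand curve; all prices, volumes, costs
and elasticities are hypothetical.
"""

BASE_PRICE = 1.00               # $/m3 before reform (hypothetical)
BASE_VOLUME = 1_000_000.0       # m3/year sold before reform (hypothetical)
REQUIRED_REVENUE = 1_300_000.0  # $/year needed to cover costs (hypothetical)


def demand(price: float, elasticity: float) -> float:
    """Volume demanded at `price`, given a constant price elasticity (< 0)."""
    return BASE_VOLUME * (price / BASE_PRICE) ** elasticity


def revenue(price: float, elasticity: float) -> float:
    """Total revenue at `price`: unit price times volume demanded."""
    return price * demand(price, elasticity)


def shortfall(price: float, elasticity: float) -> float:
    """Positive result means revenue falls short of the required revenue."""
    return REQUIRED_REVENUE - revenue(price, elasticity)


if __name__ == "__main__":
    new_price = 1.50
    for label, e in (("assumed", -0.2), ("actual", -0.8)):
        print(f"{label:>7} elasticity {e}: volume {demand(new_price, e):,.0f} m3, "
              f"shortfall ${shortfall(new_price, e):,.0f}")
```

Under the assumed elasticity the shortfall is negative, which means a projected surplus. Under the actual elasticity, sales drop to roughly 723,000 m3 and revenue falls well below the required amount. Estimating the price response for the specific case before reform is what would narrow this gap.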

Economic conditions and water supply/demand circumstances change, and information is often missing. Therefore it is advisable to espouse 'adaptive management' (20) when implementing water policy reform. Adaptive management is based on (a) systematic monitoring of the effects of the implemented policy, (b) continuous striving for outcome evaluation, and (c) changing policy based upon the updated information. It involves adopting a science-based policy mechanism with the realization that the policy maker does not have complete information about how the policy will work out but is brave enough to find out and committed to learning from the process.

Al Gore's prognostications in his book An Inconvenient Truth (21) will, one hopes, not become reality. However, it is best to be prepared for the day when policy makers and legislators have to decide who will have the right to access a water resource, under what constraints, for which purpose, at what time and for what price. The policy options presented in this paper have been adopted in other jurisdictions, where progress has not been uniformly smooth but has been encouraging. It behoves us to learn how demand-side options and water soft paths work out and how they could be applied in Canada, where supply-side management is still the dominant, conventional policy response to real or perceived water scarcity.

Acknowledgements: The Atmospheric and Climate Research Directorate, Meteorological Services Canada, financially supported research on the international experience in policy options that reflect water values. Olivia Wong, a co-op student in Environmental Science at the University of Toronto Scarborough Campus, was a very productive research assistant. Rodney White, Heather Auld, Don MacIver and Grace Koshida made many constructive suggestions. The author is responsible for all observations and conclusions.

1. Environment Canada (2004). Municipal Water Use Report – Municipal Water Use: 2001 Statistics.

2. Brandes, O., K. Ferguson, M. M'Gonigle, C. Sandborn (2005). At a Watershed: Ecological Governance and Sustainable Water Management in Canada. Victoria, B.C.: Polis Project on Ecological Governance.

3. Environment Canada (2004). Threats to Water Availability in Canada. Burlington, Ontario: National Water Research Institute. NWRI Scientific Assessment Series No. 3 and ACSD Science Assessment Series No. 1.

4. Sprague, J. (2007). 'Great Wet North', in Bakker, K. (ed.), Eau Canada: The Future of Canada's Water. Vancouver: UBC Press.

5. Alberta Environment (2003). Water for Life: Alberta's Strategy for Sustainability. Edmonton: Alberta Environment.

6. Grima, A.P. Lino (2006). “Will Canada's Well Run Dry?” idea&s (the arts & science review) vol. 3, number 1, Spring 2006, 42-43.

7. Agudelo, J.I. (2001). The Economic Valuation of Water: Principles and Methods. Value of Water Research Report Series No. 5. Delft: International Institute for Infrastructural, Hydraulic and Environmental Engineering [IHE].

8. Turner, R.K., Pearce, D. & Bateman, I. (1993). Environmental Economics: An elementary introduction. Baltimore: The Johns Hopkins University Press.

9. Freeman III, A.M. (1993). The Measurement of Environmental and Resource Values: Theory and Methods. Washington, D.C.: Resources for the Future.

10. Hirshleifer, J., J. DeHaven and J. Milliman (1960). Water Supply: Economics, Technology and Policy. Chicago: University of Chicago Press.

11. Grima, A. P. (1972). Residential Water Demand: Alternative Choices for Management. Toronto: University of Toronto Press.

12. Brooks, David B. and Roger Peters (1988). Water: The Potential for Demand Management in Canada. Ottawa: Science Council of Canada.

13. Tate, D. M. (1990). Water Demand Management in Canada: State of the Art Review. Ottawa: Social Science Series No. 23, Inland Waters Directorate, Environment Canada.

14. Lovins, Amory B. (1977). Soft Energy Paths: Toward A Durable Peace. Cambridge, Mass.

15. Brooks, David B. (2005). Beyond Greater Efficiency: The Concept of Water Soft Paths. Canadian Water Resources Journal, vol. 30 (1), 83-92.

16. Zilberman, David and Karina Schoengold (2005). The Use of Pricing and Markets for Water Allocation. Canadian Water Resources Journal, vol. 30 (1), 47-54.

17. Haddad, B.M. (2000). Rivers of Gold: Designing Markets to Allocate Water in California. Washington, D.C.: Island Press.

18. Horbulyk, T.M. (2007). 'Liquid Gold? Water Markets in Canada', in Bakker, K. (ed.), Eau Canada: The Future of Canada's Water. Vancouver: UBC Press, pp. 185-204.

19. Morris, T.J., D.R. Boyd, O.M. Brandes, J.P. Bruce, M. Hudon, B. Lucas, T. Maas, L. Nowlan, R. Pentland, and M. Phare (2007). Changing the Flow: A Blueprint for Federal Action on Freshwater. Ottawa: The Gordon Water Group of Concerned Scientists and Citizens.

20. Holling, C.S. (ed.) (1978). Adaptive Environmental Assessment and Management. London: John Wiley & Sons.

21. Gore, Al (2006). An Inconvenient Truth: The Crisis of Global Warming. New York: Rodale and Viking.

G8 Accountability: The Civil Society Effect

Peter I. Hajnal

Introduction

This paper examines the democratic accountability of the G8, with a particular focus on the role of civil society. It outlines for what and to whom the G8 is accountable; analyzes how, and to what extent, civil society engagement has or has not promoted G8 accountability; and reviews civil society interaction with the G8 and its effects on G8 accountability, including the factors that have helped or hindered civil society's contributions to that end.

What is the G8?

Despite huge publicity surrounding the annual summits, the Group of Eight remains relatively little understood outside specialist circles. The G8 comprises what are usually called the major industrial democracies: Canada, France, Germany, Italy, Japan, Russia (with reservations about its democratic credentials), the United Kingdom, and the United States.

The G8 is an informal forum of global governance, distinct from international organizations based on a charter or other intergovernmental agreement, such as the United Nations (UN) or the World Trade Organization (WTO). It also has no secretariat to carry out day-to-day implementation of policy decisions. Lacking a formal framework, it has no mechanisms to regulate its relations with other actors.

But these limitations have not prevented substantial interaction between the G8 and civil society.

The origins of the G8 go back to several shocks to the world economic system in the early 1970s, notably the collapse of the Bretton Woods international monetary system based on fixed exchange rates and the quadrupling of oil prices by OPEC (Organization of the Petroleum Exporting Countries) in 1973-74. In response to these shocks, finance ministers from France, Germany, Japan, the UK and the USA began a series of meetings in 1973. These led to summits of the leaders of these countries, with the objective of reaching policy agreements on their common challenges (1).

The first summit of the original five plus Italy was held in 1975 at the Château de Rambouillet, near Paris. Canada joined the club in 1976 to form the G7, and the European Union has participated since 1977. In 1998 Russia became a full member, creating the G8. More recently the leaders of five major emerging-economy states (Brazil, China, India, Mexico and South Africa) have joined parts of the summit proceedings; this configuration was first called the “G8+5”; in 2007 the “+5” were renamed the “Outreach 5” (“O5”).

The functions of the G7/G8 have also expanded over time. The main roles today, according to John Kirton, are deliberation, direction-giving, decision-making, and management of global governance and domestic politics (2). The summit allows the attending heads of state and government to exercise political leadership, reconcile domestic and international concerns, develop collective management, and integrate economics and politics in their negotiations and decisions.

Each G8 leader has a support apparatus headed by a personal representative known as a “sherpa”, who is assisted by two “sous-sherpas” (one for economic affairs and the other for financial matters) and a political director, as well as by logistical, security and other staff. Since 2001 each leader has also had an Africa Personal Representative. In the early years, delegations included the foreign and finance ministers, but following the organizational innovations of the 1998 Birmingham summit these ministers were detached from the leaders' summits and now hold their own meetings, which feed into the leaders' summits. These and other ministerial fora also hold their own series of meetings throughout the year. The G7/G8 has also created various task forces, expert panels and working groups, some of which have later expanded their membership beyond the G8 and developed quasi-independence.

G8 Accountability

The term accountability is used here in the sense of an actor's answerability for its actions or inactions to those who are affected by them. The issue of G8 accountability, particularly its democratic accountability, has received relatively little attention (3), yet the charge is often levelled that the G8 is not accountable. Accountability can be said to have four principal aspects: transparency, consultation, evaluation, and opportunities to redress wrongs or omissions.

For what is the G8 accountable? After the economic and financial focus of the early summits (1975-81), the agenda expanded substantially. Political and security issues became increasingly prominent in the period 1982-88. From 1989 other global issues were added: democratization, the environment, terrorism and transnational organized crime, development, poor country debts, infectious diseases, migration, food safety, energy, education, intellectual property, corruption, and various armed conflicts. The G8 can therefore be held to account for its actions and inactions in all those areas.

To whom can the G8 be held accountable? The stakeholders include the eight member governments and their citizens, and the global community as a whole, including marginalized groups. Mutual accountability also operates, with the G8 requiring others to answer to it even as it answers to them.

There is relatively strong internal accountability within the G8 system; the leaders answer to their peers. When, at a summit, they undertake before their peers to accomplish a particular objective, they must face those colleagues again at future summits if they fail to comply with their commitments. As well, task forces and other subgroups must report back to the leaders or ministers when asked to do so.

As elected heads of state or government, individual G8 leaders are accountable to their own constituencies. This accountability is discharged, for example, through regular post-summit reports by UK prime ministers to Parliament and the Canadian government's follow-up reports on G8 initiatives on Africa. As well, host governments are accountable for public money spent on organizing summits and other G8-related actions. But on the whole, G8 accountability through elected national legislatures is still insufficient.

The G8, arguably, is also accountable to the global community as a whole, since it is an instrument of global governance addressing global issues. The G8 leaders have undertaken to provide global public goods for the benefit of all. But even with the addition of Russia in 1998 and the “+5”/“Outreach 5” since 2005, the G8 is anything but representative of humanity as a whole. Inadequate representation has compromised G8 accountability (and perhaps legitimacy) (4). Several recent proposals have sought to remedy this imbalance. One initiative, advocated by former Prime Minister Paul Martin, would turn the G8 into an L20 (Leaders' 20) patterned on the G20 finance ministers' forum but with a broader global agenda. Another proposal would incorporate the “Outreach 5” as regular members of an enlarged G13.

One way the G8 attempts to fulfil its accountability to wider humanity is by interacting with regional and global governance agencies. For a number of years, the G8 leaders have invited the administrative heads of international organizations to the summits for consultation: the UN Secretary-General, the Managing Director of the International Monetary Fund, and the heads of the World Bank, the OECD (Organisation for Economic Co-operation and Development), the International Energy Agency (IEA) and the African Union. On issues where the G8 is unable or unwilling to act decisively, it tends to remit the task to an IGO (intergovernmental organization), for instance by referring unresolved trade problems to the WTO. But such transfers of responsibility weaken G8 accountability.

Beyond this, the G8 has acknowledged for some time its responsibility to extend the benefits of globalization to marginalized groups, and to remedy economic and other inequalities. But G8 action has been uneven; some benefits have accrued to disadvantaged populations, but many G8 promises remain unfulfilled.

In spite of their own weak accountability, the G8 leaders expect accountability from other actors. For example, the 1995 Halifax summit called for "a more transparent and accountable [UN] Secretariat".

Civil Society Engagement with the G8

In this paper, “civil society” denotes not-for-profit nongovernmental organizations (NGOs), coalitions and mass movements. Some definitions also include business fora, but this is problematic because the interests, modus operandi and influence of the private sector diverge from those of nonprofit civil society organizations (CSOs). G8 officials themselves distinguish between business players on the one hand and non-profit CSOs on the other.

Four distinct phases can be identified in the evolution of civil society-G8 relations:

Many kinds of NGOs and other civil society groups have engaged with the G8: environmental groups, human rights NGOs, development and relief agencies, mass campaigns, faith-based groups across Christian, Jewish, Muslim, Buddhist, Hindu and other traditions, groups focusing on various social and political issues, youth groups, CSOs focusing specifically on the G8, women's groups, trade unions, professional bodies, research groups and think tanks. The role of celebrities is notable; Bono and Bob Geldof are the best-known examples. As well, other prominent personalities such as Gro Harlem Brundtland and Stephen Lewis have spoken out about the G8.

CSOs tend to concentrate their activities in the summit host country. This has important implications; NGOs from other continents and other countries, particularly from the global south, often lack sufficient financial and human resources to travel to the summit venues. Civil society from the south is often represented by diaspora groups residing in the summit country.

Four Modes of Civil Society Interaction with the G8

One important example is the Global Fund to Fight AIDS, Tuberculosis and Malaria.

Civil society has helped raise government awareness of citizens' concerns about issues on the G8 agenda and has occasionally stimulated government responses. And CSOs, when engaging in dialogue with official circles, have gained a greater appreciation of what governments can and cannot do in the G8 context. Civil society groups, in turn, benefit from the availability of channels for advocacy vis-à-vis G8 governments. So both parties may be using each other while also benefiting from the interaction.

Four Dimensions of G8 Accountability

Transparency

G8 proceedings have become more open over time. The volume of publicly released documentation has grown significantly from the rather meager output of early summits. There has also been a general trend to disclose more substantial information, including detailed action plans. Media briefings by G8 officials before and during summits are another indication of increased transparency, marking a break from the relative secrecy of earlier years. CSOs have pressed the G8 on transparency for a number of years, but moves toward greater openness are difficult to attribute specifically to civil society activities.

The G8 has much more to do before reaching an adequate level of transparency. The detailed proceedings of the in camera meetings of the leaders remain confidential apart from strategic partial disclosures in off-the-record briefings. But not every G8 government briefs the public with equal diligence. The official archives of the member governments that hold the detailed information normally only become publicly available twenty-five or thirty years after the event, so that documentation of even the earliest G7 summits is only now coming to light.

Consultation

Civil society dialogue with officials of G8 governments is an important means of exchanging ideas and (occasionally) developing shared positions. Dialogue implies willingness to co-operate – not necessarily agree – with G8 governments.

Consultation became part of the regular G8 process with the 2000 Okinawa summit, when the Japanese host government met with civil society leaders from Europe ahead of the meeting, and at the summit itself the Japanese Prime Minister met with representatives of five NGOs to discuss the effects of globalization, the environment, infectious diseases, and the importance of partnership between governments and civil society.

Every subsequent G8 summit, with one exception, has included direct consultation between summit officials and CSOs; the US host government of the 2004 Sea Island summit was unwilling to engage with civil society.

A positive example was the 2006 Civil G8 coalition, which organized a year-long series of workshops and meetings, including two large NGO fora (one with the participation of President Putin) and sessions with all G8 sherpas.

The push by civil society actors for consultation has strengthened G8 accountability. G8 leaders and other officials are now well socialized into an established process of interchange with citizen groups. The leaders, particularly during their summit hosting year, have come to be expected to consult with civil society. Many CSOs have found consultation to be the most efficacious way of bringing their concerns and proposals directly to the G8.

G8 Performance Evaluation

Evaluative reports assess the G8's compliance with its commitments, acknowledge any advances made and point up failures to fulfil promises. Some evaluations measure performance in terms of a numerical score or a letter grade, while others present a narrative analysis. Assessments from civil society can be used to press the G8 to improve its performance and accountability to the broader global community. These assessments have had an effect; G8 governments now expect this kind of scrutiny, and the G8 itself has recently begun to undertake some self-monitoring.

Seeking Redress

Multi-stakeholder partnerships are potentially the most promising way of obtaining from the G8 redress or remedy for wrong actions or lack of beneficial actions. Examples of situations calling for redress are unfavourable trade conditions imposed on developing countries, and inadequate or misdirected official development assistance.

The various civil society tactics discussed earlier can serve the purpose of seeking redress. Street demonstrations are perhaps the most familiar to the public; the “Make Poverty History” march in Edinburgh in 2005, which attracted 250,000 participants, is an important example.

Another tactic has involved petitions, such as the one assembled by Jubilee 2000 in 1998, when it collected signatures to urge the Birmingham summit to forgive all external debt of the poorest countries by the year 2000. The summit responded to Jubilee's petition in a collective statement, implying some acknowledgement of G8 accountability on debt matters.

Media campaigns are another tactic. Press releases and opinion pieces help bring civil society positions to public and government attention, and call for redress. Sympathetic media coverage of civil society concerns can serve to promote G8 accountability, but journalists often focus on the occasional incidents of violence or on “street theatre” rather than on important issues and peaceful action.

Alternative summits, too, have the potential to demand redress from the G8. But all in all, civil society has had limited effect on obtaining redress.

Factors Helping and Hindering Civil Society Engagement with the G8

What strategies and tactics have helped or hindered civil society's efforts to increase G8 accountability?

1. NGOs and civil society coalitions stand a much better chance of having an impact on the G8 when they network with like-minded groups. For example, the Global Campaign against Poverty has brought together a wide array of CSOs and movements concerned with all causes and aspects of poverty.

2. Civil society has been most effective when it recognized and exploited linkages between G8 issues. One positive example is the DATA group (Debt, AIDS, Trade, Africa), which has highlighted the interconnectedness of these issues.

3. More successful CSOs have shown their readiness to be reactive or proactive, according to need. This implies, for example, taking advantage of issues on the G8 agenda that are also important to civil society, as well as lobbying to try to get other civil society concerns on the G8 agenda.

4. CSOs have been more successful in their relationship with the G8 when they recognized the G8 summit as being part of a continuum of major international meetings taking place in any given year. This has implications for continuing action around these other international fora: the UN, the WTO, the IEA, the WHO (World Health Organization) and other organizations.

5. Thorough knowledge of the G8 system and process is crucial for NGOs if they wish to have real impact on G8 accountability. This includes dialogue with the whole G8 system, including ministerial, task force and sherpa meetings, awareness of the timing and agenda of such meetings, and familiarity with G8 member governments' priorities and summit-supporting institutions.

6. Because G8 agenda-building is at least a year-long process, in which the agenda is formulated and honed gradually from one summit to the next, CSOs can influence the G8 more successfully if they get involved in the process early.

7. It is a continual challenge for civil society to isolate potentially violent or disruptive elements. After 9/11, this has become even more crucial, and it calls for vigilance, self-patrolling and other efforts at G8 summits.

8. Certain CSOs choose not to engage with the G8, on grounds of resources or ideology. CSOs face difficult choices. Is it worth expending time and energy on dialogue and other interaction with G8 governments around summits and ministerial meetings? Is it worth giving up any influence on the G8 because the latter is perceived as illegitimate or not truly powerful?

9. When a host country is unwilling to interact with civil society, NGOs and other CSOs have other options to influence the G8: advocacy, policy papers, dialogue with receptive non-host G8 governments, and staging parallel events. National NGOs based in G8 countries are in a strong position to lobby their own government.

10. Finally, while G8 government initiatives toward civil society actors are important, civil society does not need to take its cues from government. CSOs have been more influential when they developed strategies on their own terms, rather than depending on G8 “outreach”.

Conclusion

G8 leaders can be held accountable for actions and inactions across a broad range of economic, political, environmental and other global issues. The leaders are individually accountable to their electorates as well as to their fellow G8 leaders. The G8, as a powerful transgovernmental forum, is collectively accountable to the broader global community, including marginalized groups, and to the various regional and global organizations with which it interacts. Internal accountability within the broader G8 system is relatively strong, but democratic accountability to other actors is weaker.

In terms of performance on accountability, transparency of the G8 has increased over the years but remains inadequate. Civil society's influence on this is difficult to ascertain except for summit documents and briefings that explicitly recognize CSOs. Public consultation has become common practice and has increased G8 accountability, but the practice remains uneven across the G8. Monitoring and evaluation, including such efforts by civil society, have taken root but they need to become more systematic. Opportunities for obtaining redress for wrong actions or lack of beneficial actions are largely lacking; the few instances of successful multi-stakeholder partnerships have regularized consultations and are working for redress.

Overall, then, G8 accountability is generally still weak. Although civil society has had a major role in enhancing the various dimensions of accountability, its contribution has not yet reached its potential.

Notes and References

1. Putnam, Robert D. and Bayne, Nicholas (1987). Hanging Together: Cooperation and Conflict in the Seven-Power Summits, rev. ed. Cambridge, MA: Harvard University Press, pp. 25-27.

2. Kirton, John J. (2006). A Summit of Significant Success: The G8 at St. Petersburg. Toronto: G8 Research Group, 19 July, p. 6. <www.g8.utoronto.ca/evaluations/2006stpetersburg/kirton_perf_060719.pdf>

3. Two exceptions are recent contributions by Ullrich on G8 accountability in respect of trade governance and by O'Manique in respect of global health and human rights. Ullrich, Heidi K. (2007). “Toward Accountability? The G8, the World Trade Organization and Global Governance”, in M. Fratianni, P. Savona and J.J. Kirton (eds), Corporate, Public and Global Governance: The G8 Contribution. Aldershot: Ashgate, pp. 99-125; O'Manique, Colleen (2007). “Global Health and Universal Human Rights”, in A.F. Cooper, J.J. Kirton and T. Schrecker (eds), Governing Global Health: Challenge, Response, Innovation. Aldershot: Ashgate, pp. 207-26.

4. Hajnal, Peter I. (2007). Summitry from G8 to L20: A Review of Reform Initiatives. CIGI Working Paper 20. <www.cigionline.org/community.igloo?r0=community&r0_script=/scripts/folder/view.script&r0_pathinfo=%2F%7B7caf3d23-023d-494b-865b-84d143de9968%7D%2FPublications%2Fworkingp%2Fsummitry&r0_output=xml&s=cc>

Interest and its Link to Self-Regulation

Suzanne Hidi

A. Historical Background

In the late 19th century, the psychologists Ebbinghaus and James came to realize the importance of interest for human cognitive performance. They acknowledged that interest strongly influences what people pay attention to and remember. In the first part of the 20th century, the role of interest in improving comprehension, stimulating effort and personal improvement, and facilitating learning was recognized by scholars such as Dewey (1913), Arnold (1906), Claparède (1905) and others. Berlyne (1949) may have been the first to point out that feelings are an important aspect of interest.

As many of my colleagues and I have argued, in the second half of the 20th century the significance of affective and motivational variables in general, and of interest in particular, declined, first because of behaviourism and subsequently because of the so-called cognitive revolution. In the last 25 years, however, both psychologists and neuroscientists have begun to focus again on affective variables, emotions and feelings, and interest has again been acknowledged as a critical motivational variable that influences learning and achievement.

B. Revival of Interest Research

In the latter part of the 20th century, Hans Schiefele, a German educational psychologist, was perhaps the first to argue that interest has a pivotal role in education. Specifically, he maintained that the differentiation, development and stabilization of academically relevant interests should be one of the most important goals of education.

C. Research Findings

Since the revival of interest as an academically relevant concept, a wide-ranging set of empirical studies has shown the positive influence of interest on attentional processes, on the quantity and quality of learning, on the choice and organization of learning strategies and goals, and on persistence. These findings have been published in a wide variety of papers, journals and books. However, in order to interpret the empirical findings, the conceptualization of interest needs to be discussed at a more theoretical level.

D. Definitions of Interest

What is the most appropriate definition of interest? A momentary fixation? An attraction, or a fascination? A preference or an attitude? A love of learning, or a passion? A trait such as curiosity, or a motivational belief? Although all of these conceptualizations have been suggested by various researchers, my colleagues and I consider interest to be a unique motivational variable, as well as a psychological state that occurs during interactions between individuals and their environment and is characterized by increased attention, concentration and affect. In addition, the term interest can also refer to relatively enduring predispositions to re-engage with particular contents such as objects, events and ideas. Such predispositions develop from experiencing the psychological state of interest over time.

E. Unique Characteristics of Interest

Why do we consider interest to be a unique motivational variable? In contrast to cognitively driven motivational variables such as achievement goals, self-efficacy, task value and self-determination, which are viewed as having affect as a cognitive outcome, interest theory conceptualizes interest as having both affective and cognitive components. Conceptualizing affect as an inherent component of interest is one aspect that sets interest apart from the other motivational variables, which tend to treat affect as an outcome of the representational aspects of motivation and cognitive processing. It also allows the integration of psychological and neuroscientific approaches. The other aspect that sets interest apart from other motivational variables is that interest has unique biological roots. Specifically, as the work of the neuroscientist Panksepp demonstrates, the uniqueness of interest as a motivational variable is related to an evolutionarily and genetically ingrained emotional brain system called the seeking system.

F. Interest Types

Two types of interest, situational and individual, have been the focus of research. To illustrate the triggering of situational interest, think of listening to a lecture on an unfamiliar topic that you unexpectedly find fascinating. This experience is what we call situational interest. In this psychological state, one usually experiences positive affect and focused attention. Once triggered, situational interest may fade, or it may be maintained. Activities such as asking questions or reading more about the topic can help sustain situational interest.

Those of you who have previously considered motivational issues in education may, in reading this article, experience what we call individual interest. This type of interest develops over time and is a relatively enduring predisposition to attend to and re-engage with objects, events and ideas. Individual interest is also associated with positive feelings and increased value and knowledge, and it energizes and motivates learners' thoughts and actions in goal-directed ways. It is important to understand that both situational interest and individual interest can be associated with the psychological state of interest.

G. The Four Phase Model of Interest Development

Building on and extending existing research, my colleagues and I presented a four-phase model of interest development. The proposed model (a) provides a description of how interest unfolds, (b) points to the need for researchers to identify the type of interest they are investigating, and (c) suggests ways in which educators and parents could contribute to interest development. Briefly, the four phases are triggered situational interest, maintained situational interest, emerging (or less-developed) individual interest, and well-developed individual interest. My colleague and I summarized the model as follows:

"Each phase of interest can be characterized by varying amounts of affect, knowledge, and value. The length and character of a given phase is likely to be influenced by individual experience, temperament, and genetic predisposition. The four phases are considered to be sequential and distinct, and represent a form of cumulative, progressive development in cases where interest is supported and sustained either through the efforts of others or because of challenges or opportunity that a person sees in a task. However, without support from others, any phase of interest development can become dormant, regress to a previous phase, or disappear altogether."

Self-regulation is presumed to increase as individual interest develops.

Introduction

a) Definition

Self-regulation refers to the ways in which individuals regulate their thoughts and actions. Self-regulation of learning refers specifically to those self-generated operations that focus on individuals' acquisition of academically relevant knowledge and skills. Whereas earlier self-regulation research focused on individuals' ability to be active participants in their own cognitive, motivational, and behavioral processes, more recently the capacity to regulate affect has been included as a critical aspect of self-regulation.

b) Zimmerman's Cyclical Phase Model of Self-Regulation

Zimmerman postulated that self-regulatory processes and associated self-motivational beliefs influence learning in three successive, cyclical phases (forethought, performance, and self-reflection), each characterized by various sub-processes of self-regulation. Self-motivational beliefs, one of the two major categories of the forethought phase, include interest; the other motivational constructs referred to as self-motivational beliefs are self-efficacy, outcome expectations, and goal orientation. Zimmerman's model thus acknowledges that interest influences the forethought phase.

c) The Four Developmental Levels of Self-Regulation

The development of self-regulatory skills has been postulated to proceed through four levels: observing a model, emulating the model's performance, exercising self-control by planning and monitoring one's own performance, and finally self-regulating by adapting to changing internal and external conditions.

Interest and Self-Regulation: Reciprocal Interdependence

Although interest and self-regulation have tended to be investigated independently, these two variables are closely associated and reciprocally interdependent. Both have been associated with similar positive outcomes, such as increased attention, better selection of goals and learning strategies, and higher levels of learning.

Moreover, both have been shown empirically to be linked to perceptions of self-efficacy. Even though self-regulation can be taught and may occur without high levels of interest, the development and maintenance of self-regulatory skills can be greatly enhanced by learners' interest in relevant activities. In particular, developing interest in an activity may contribute to the development of self-regulatory skills by reducing the need for conscious goal direction, triggering more automatic processes, and leading to greater overall effort.

Self-regulation researchers such as Zimmerman and Pintrich acknowledged that interest contributes to the learning of self-regulatory processes and to the maintenance of such acquired skills, although they tended to focus on goals as the most relevant motivators of self-regulation. That is, they argued that the critical influence on individuals' motivation to self-regulate is how much learners value and expect to attain goals, and how they mentally represent them. Whereas these researchers acknowledged the importance of interest in the forethought phase of self-regulation, they did not recognize its importance in the performance phase. Yet the psychological state of interest during an activity, with its positive feelings and concurrent physiological changes such as increased dopamine levels, may contribute to the development of self-regulation. Interest researchers go so far as to argue that interest development can occur before goals are cognitively represented, and that interest can play a unique role in the development of self-regulation specifically, as well as in all forms of knowledge acquisition and performance.

Screening for Cancer: Are We Chasing an Elusive Dream?

Anthony B. Miller

It seems self-evident that early detection of breast cancer will reduce deaths from the disease. There are two forms of early detection. The first is achieved through breast cancer awareness, education about the risk of cancer, and the promotion of breast self-examination. These measures led to a tendency for tumours to be diagnosed at a less advanced stage, and to the introduction of lumpectomy instead of mastectomy for many cancers found in this way. The second form of early detection is screening, the administration of a test to find disease before symptoms have developed. But screening has many components, and it has not proved entirely effective, as I shall show.

What do we expect from screening? Principally, reassurance that we do not have cancer. However, if we do have cancer, we hope that detecting it early will make it possible to cure it.

Conversely, what do we NOT expect from screening? Certainly we do not expect to be told that we do not have cancer when we do. Yet it must be understood that no screening test can offer absolute certainty: all tests will miss some cancers that are in fact present at the time of the test. Nor do we expect the detection of a “cancer” that will never cause us any harm. Yet this is what nearly all screening tests do, a process we call over-diagnosis.

In this talk I plan to describe some of the deceptions that have unwittingly been practiced on a gullible public, not because there is any “plot” to deceive people, but because very often, those advocating screening have an imperfect understanding of its many deficiencies.

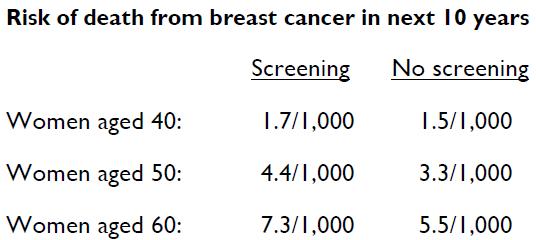

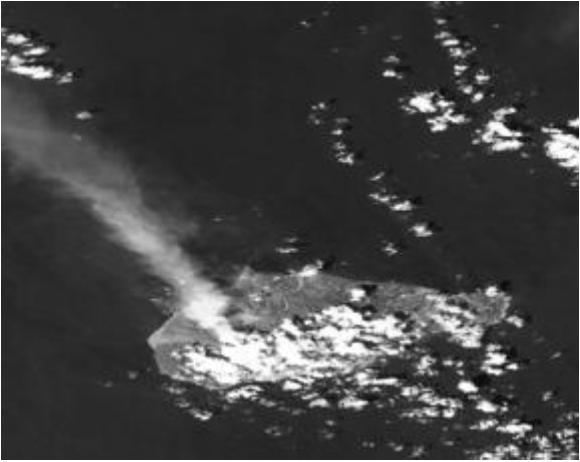

Take the example of breast cancer. Since about 1990, there has been a remarkable drop in mortality from breast cancer (the death rate from breast cancer in the population) in many countries, especially the UK, the USA and Canada (Figure 1). Most commentators have attributed this success to mammography screening, though a few also credit improvements in therapy. In fact, the timing of the fall makes no sense at all in relation to the introduction of mammography screening in Canada. Few screening programs had been introduced by 1990, and many did not reach a high proportion of the population before 1996. Moreover, the scientific trials that showed screening was effective also showed a 5-7 year delay after screening starts before a reduction in breast cancer deaths is seen. The alternative explanation for the drop in mortality is improved therapy, especially the introduction of adjuvant hormone therapy (tamoxifen) in post-menopausal women and adjuvant chemotherapy in pre-menopausal women. As these therapies were introduced in the mid-1980s, a reduction in mortality starting around 1990 is entirely compatible with improved treatment.

Figure 1: Trends in Mortality from Breast Cancer in Canada: age-standardized rates per 100,000 women

We must remember that screening only works if an effective treatment is available for the cancers it discovers. This probably explains why, in spite of the earlier detection that had been occurring for two or more decades, breast cancer mortality did not fall until 1990, after the new treatments were introduced in the 1980s. Conversely, if treatment could cure all cancers, there would be no role for screening. So as treatment improves, screening becomes less important.

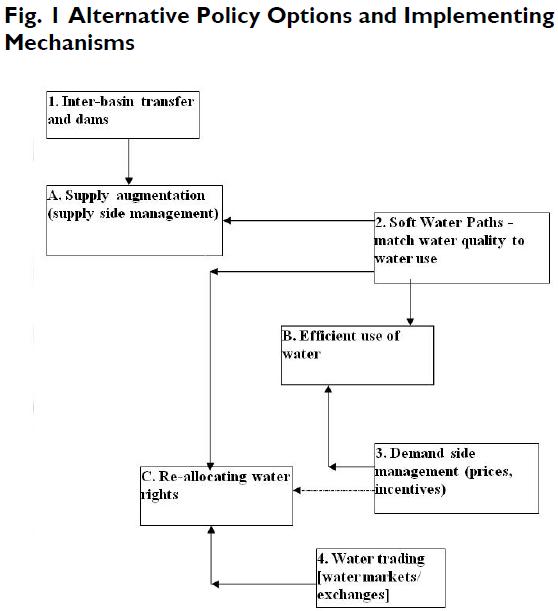

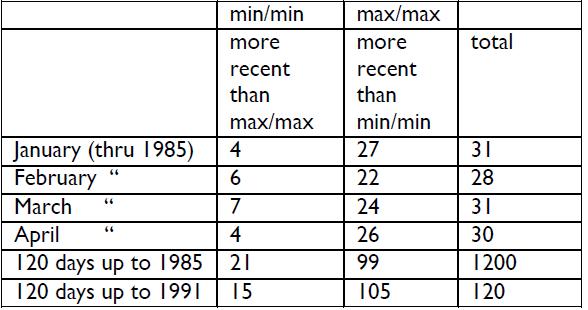

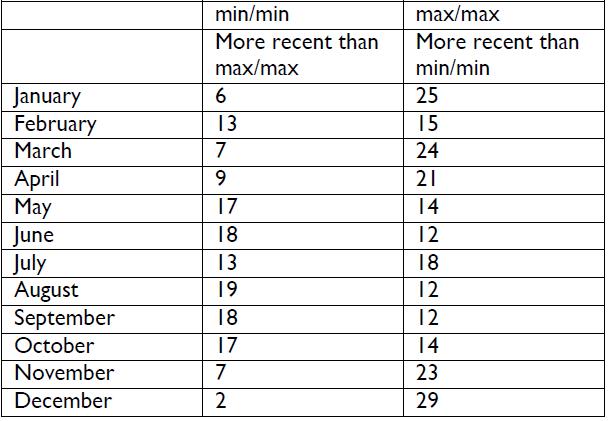

Why do people credit screening with the recent fall? One reason is that an expert group assembled by the International Agency for Research on Cancer in 2002 concluded that mammography screening reduced the risk of death from breast cancer by 12% in women aged 40–49 and by 25% in women aged 50–69 (IARC, 2002). These figures seem impressive, though less so for younger women. But they are far less impressive when related to the actual risk of death at different ages, as in the following table:

Yet women invited to be screened are never told that the benefit for them may be vanishingly small.
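The contrast between relative and absolute benefit can be made concrete with a little arithmetic: the absolute reduction in risk equals the baseline risk multiplied by the relative reduction, and the number of women who must be screened to avert one death is its reciprocal. The Python sketch below applies the IARC (2002) relative reductions quoted above (12% and 25%) to a baseline of 100 breast cancer deaths per 100,000 women. That baseline is a hypothetical round number chosen for illustration only; it is not taken from the table referred to above or from any study.

```python
# Sketch: turning a relative risk reduction into an absolute one.
# The relative reductions (0.12 and 0.25) are the IARC (2002) figures quoted in the text.
# The baseline of 100 deaths per 100,000 women is a HYPOTHETICAL placeholder,
# not a value from the paper's table or from any study.

PER = 100_000  # rates are expressed per 100,000 women


def absolute_reduction(baseline_per_100k: float, relative_reduction: float) -> float:
    """Deaths averted per 100,000 women screened = baseline deaths x relative reduction."""
    return baseline_per_100k * relative_reduction


def number_needed_to_screen(baseline_per_100k: float, relative_reduction: float) -> float:
    """Women who must be screened to avert one death = 1 / absolute risk reduction."""
    averted = absolute_reduction(baseline_per_100k, relative_reduction)
    if averted <= 0:
        raise ValueError("no deaths averted; number needed to screen is undefined")
    return PER / averted


if __name__ == "__main__":
    hypothetical_baseline = 100.0  # assumed deaths per 100,000 women, illustration only
    for ages, rrr in [("40-49", 0.12), ("50-69", 0.25)]:
        averted = absolute_reduction(hypothetical_baseline, rrr)
        nns = number_needed_to_screen(hypothetical_baseline, rrr)
        print(f"ages {ages}: {averted:.0f} deaths averted per 100,000 screened; "
              f"about {nns:,.0f} women screened per death averted")
```

Under this assumed baseline, a 25% relative reduction averts 25 deaths per 100,000 women screened, so about 4,000 women would need to be screened to avert one death; the 12% figure implies roughly twice as many. Substituting the real age-specific risks of death would give the actual figures.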

In Canada, the National Breast Screening Study 2 (CNBSS-2) directly evaluated the role of mammography screening in women aged 50-59, over and above any benefit derived from careful physical examination of the breasts. In all provinces except Quebec, these examinations were performed by trained nurses; in Quebec, doctors performed them. After giving informed consent, 39,405 volunteers were randomized either to the MP arm, which received annual two-view mammography plus physical breast examination plus breast self-examination (BSE), or to the PO arm, which received annual physical breast examination only plus BSE. Four or five annual screens were conducted, and 16 years of follow-up are currently available. Of the invasive breast cancers detected by screening in the MP arm, 126 were detected by mammography alone and a further 141 by mammography plus physical examination or by physical examination alone. In the PO arm, 148 were detected by physical examination. Of the non-invasive (in situ) cancers, 73 were detected in the MP arm, the large majority by mammography, compared with only 16 in the PO arm. In spite of this excess of cancers found by mammography, there has been no impact on breast cancer mortality during follow-up (Miller et al, 2000).

The radiology community greeted these negative findings with accusations that the mammograms were of poor quality. However, this ignored the near doubling of cancer detection rates achieved by mammography, and the fact that cancer detection was, if anything, superior to the rates achieved in other screening programs. Recently, in collaboration with Erasmus University, Rotterdam, a validated simulation model was applied to our data; it indicated that, compared with no screening, mammography resulted in a 16-36% reduction in breast cancer mortality and the physical examinations in a 20% reduction (Rijnsburger et al, 2004).
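The “near doubling” of detection can be checked against the CNBSS-2 counts quoted above. This minimal Python sketch totals the screen-detected cancers in each arm and compares them; it assumes, as randomization intends, that the two arms are of roughly equal size, so that raw counts can stand in for detection rates.

```python
# Screen-detected cancers in CNBSS-2, using the counts quoted in the text.
# Assumption: the MP and PO arms are of roughly equal size (randomized),
# so a ratio of raw counts approximates a ratio of detection rates.

MP_INVASIVE = {
    "mammography alone": 126,
    "mammography plus physical exam, or physical exam alone": 141,
}
PO_INVASIVE = {"physical examination": 148}
MP_IN_SITU = 73
PO_IN_SITU = 16


def detection_ratio(mp_count: int, po_count: int) -> float:
    """Ratio of cancers detected in the MP arm to those detected in the PO arm."""
    return mp_count / po_count


# Total invasive cancers detected by screening in each arm.
mp_total = sum(MP_INVASIVE.values())
po_total = sum(PO_INVASIVE.values())

if __name__ == "__main__":
    print(f"Invasive: MP {mp_total}, PO {po_total}, "
          f"ratio {detection_ratio(mp_total, po_total):.2f}")
    print(f"In situ:  MP {MP_IN_SITU}, PO {PO_IN_SITU}, "
          f"ratio {detection_ratio(MP_IN_SITU, PO_IN_SITU):.2f}")
```

The invasive totals come to 267 versus 148, a ratio of about 1.8, and the in situ counts differ more than fourfold, consistent with the statement that mammography found substantially more cancers.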

One outcome of the Canadian trial was to provide a scientific basis for an alternative approach to breast screening, currently being evaluated in a number of low- and middle-income countries following a pilot study that began in Cairo in 2000 (Boulos et al, 2005). There are already preliminary indications from this study that a shift towards a more favourable stage distribution (earlier stage at diagnosis) is being achieved (Miller, 2008). Through the Eastern Mediterranean Region of the World Health Organization, similar studies have been initiated in Sana'a, Yemen; Khartoum, Sudan; Erbil, Iraq; and Yazd, Iran. Each program is adapting the project to its own culture and facilities.

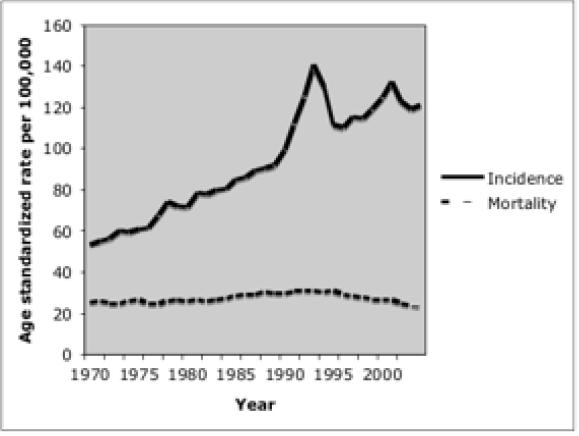

I turn now to screening for prostate cancer, increasingly a topic of public discussion, especially now that the Ontario government has decided to pay for PSA blood tests. There has been a major increase in the incidence of prostate cancer in Canada, largely because of the PSA test, but little impact on prostate cancer mortality (Figure 2).

Figure 2: Trends in Incidence and Mortality from Prostate Cancer in Canada: age-standardized rates per 100,000 males.

Two prostate screening research trials are ongoing, both begun in 1993. The US trial recruited 76,705 men; screening has been completed, and follow-up for some participants exceeds 13 years. The European trial is larger (about 200,000 men). Neither trial has been stopped, nor have any mortality results been reported, as they would have been if a significant benefit had been seen. So we can conclude that there is no early benefit from screening for prostate cancer.

Yet data are accruing from these trials that give us a better understanding of the potential adverse effects of screening. Estimates from the Rotterdam component of the European trial show that lead time (the time by which PSA testing advances the diagnosis of prostate cancer compared with when it would have been diagnosed in the absence of screening) is on average 11.2 years, while over-diagnosis occurs in 48% of the cancers detected; that is, these cancers would never have presented during the men's lifetimes in the absence of screening (Draisma et al, 2003).

The implications of long lead times and over-diagnosis are serious for a man aged 65 whose PSA test leads to the detection of a prostate cancer. There is nearly a 1 in 2 chance that the detection was unnecessary, yet he will live all his remaining years with the knowledge of a cancer diagnosis. And because there is no evidence of benefit, it is very likely that the impotence and incontinence resulting from his surgery are unnecessary.

In a recent book, Raffle and Gray (2007), both involved with national screening programs in the UK, coined the term “Popularity Paradox” for this situation: “The greater the harm through over-diagnosis and over-treatment from screening, the more people there are who believe they owe their health, or even their life, to the programme.”

Screening for cancer does seem to work when cancer precursors can be detected and treated. The best example is the Pap smear for cancer of the cervix, first introduced for screening in British Columbia in 1949. For about two decades, mortality from the disease has been falling in most countries, with reductions since 1950 of about 80% in countries with the most successful programs, including Canada and the USA (Figure 3).

Figure 3: Trends in mortality from Cancer of the Cervix: age-standardized rates per 100,000

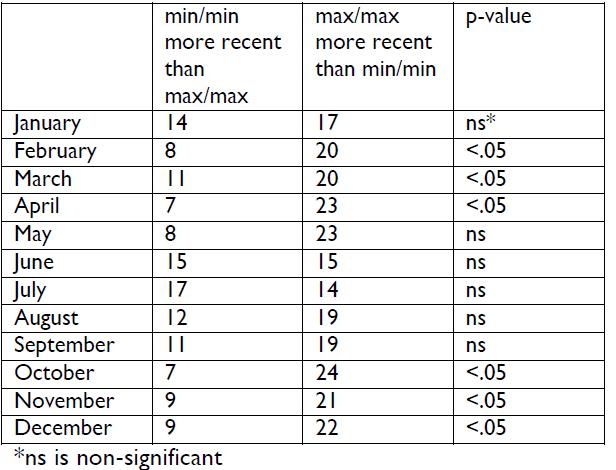

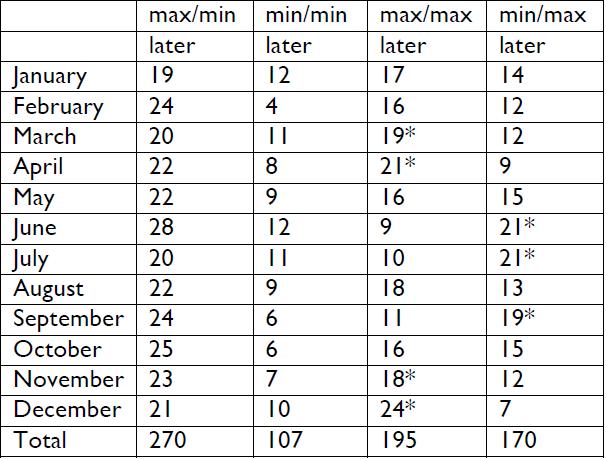

However, the reduction achieved has been as great in Finland as in Canada and the USA, even though in Finland screening is offered only every 5 years and only to women aged 30-59, while in the USA and Canada screening tends to start soon after the onset of sexual activity and is often done annually. Thus, although the USA and Canada have done as well in terms of mortality reduction, this success has come at the cost of far greater resources, largely because the lessons we have learnt about the natural history of the disease have not been applied.